Building the perfect CMDB with System Center Part 2

High Level Design Look - Painting the picture of how it would look on various levels

Recap of previous part

To see the first part of this series you can view it right here

In Part 1 we went through an analysis of all the toolsets within our estate: those we can pull CMDB data from directly, and those which may need an alternative way of connecting in order to pull data, ranging from bespoke tools all the way to legacy systems.

Introduction to Part 2

This part covers the HLD (high level design) of how the overall CMDB solution would look, with multiple scenarios accounted for. We will also dive a little into the integrations with other toolsets which carry a CMDB role, where a synchronous or asynchronous relationship can perhaps be established between them.

These scenarios should hopefully cover most kinds of setups and environments, and they leave room for scaling up the reach of the CMDB connections across all toolsets which may be available.

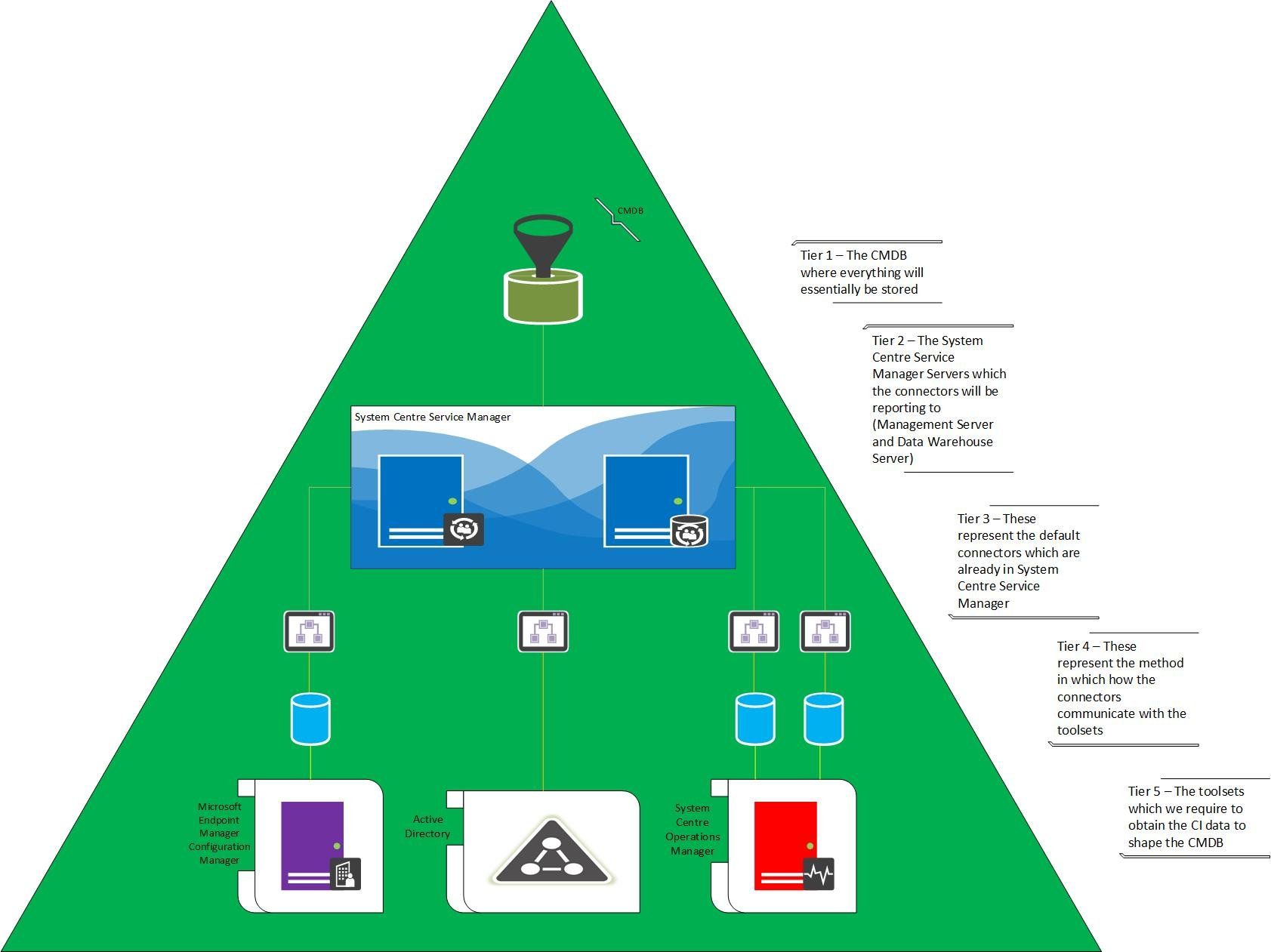

Scenario 1 - Design of CMDB with default connectors

Here we have what would be an out-of-the-box setup in System Center Service Manager, which already contains the following connectors by default:

- Active Directory

- Microsoft Endpoint Manager Configuration Manager

- System Center Operations Manager (CI and Alert connectors respectively)

This would be considered a base CMDB setup. It should be able to account for all computer objects as well as hardware/software inventory, on the assumption that all CIs are managed and maintained across the main System Center products, with Active Directory as the backbone of your infrastructure.

The connectors for SCCM/MEMCM and SCOM each connect to the database servers which hold the operational database for the respective tool. That data feeds through the connectors into Service Manager as CI information, which in turn populates the CMDB.

Active Directory, on the other hand, connects through LDAP to the domain/forest you choose to pull from, which feeds into its base connector within Service Manager.
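To make the data flow in Scenario 1 a little more concrete, here is a minimal Python sketch of how records arriving from several connectors could end up as one CI per computer. It is purely illustrative: the `ConfigItem` class, the `merge_connector_feeds` function and the sample records are all assumptions of mine, and it does not use or represent the Service Manager connector framework itself.

```python
from dataclasses import dataclass, field


@dataclass
class ConfigItem:
    """One CI in a hypothetical in-memory CMDB, keyed by computer name."""
    name: str
    attributes: dict = field(default_factory=dict)
    sources: set = field(default_factory=set)


def merge_connector_feeds(feeds):
    """Merge records from several connectors into one CI per computer.

    `feeds` maps a connector name to a list of dict records, each with a
    "name" key. Names are compared case-insensitively. The first connector
    to supply an attribute wins; later connectors only fill in gaps.
    """
    cmdb = {}
    for connector, records in feeds.items():
        for record in records:
            key = record["name"].lower()
            ci = cmdb.setdefault(key, ConfigItem(name=key))
            ci.sources.add(connector)
            for attr, value in record.items():
                if attr != "name":
                    ci.attributes.setdefault(attr, value)
    return cmdb


# Made-up sample data standing in for the three default connectors.
feeds = {
    "ActiveDirectory": [{"name": "SRV01", "ou": "Servers"}],
    "ConfigMgr": [
        {"name": "srv01", "os": "Windows Server 2019"},
        {"name": "PC07", "os": "Windows 10"},
    ],
    "OperationsManager": [{"name": "SRV01", "health": "Healthy"}],
}
cmdb = merge_connector_feeds(feeds)
```

In this sketch `SRV01` becomes a single CI enriched by all three sources, while `PC07` is only known to one of them, which is the kind of gap the later quality control work would want to flag.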

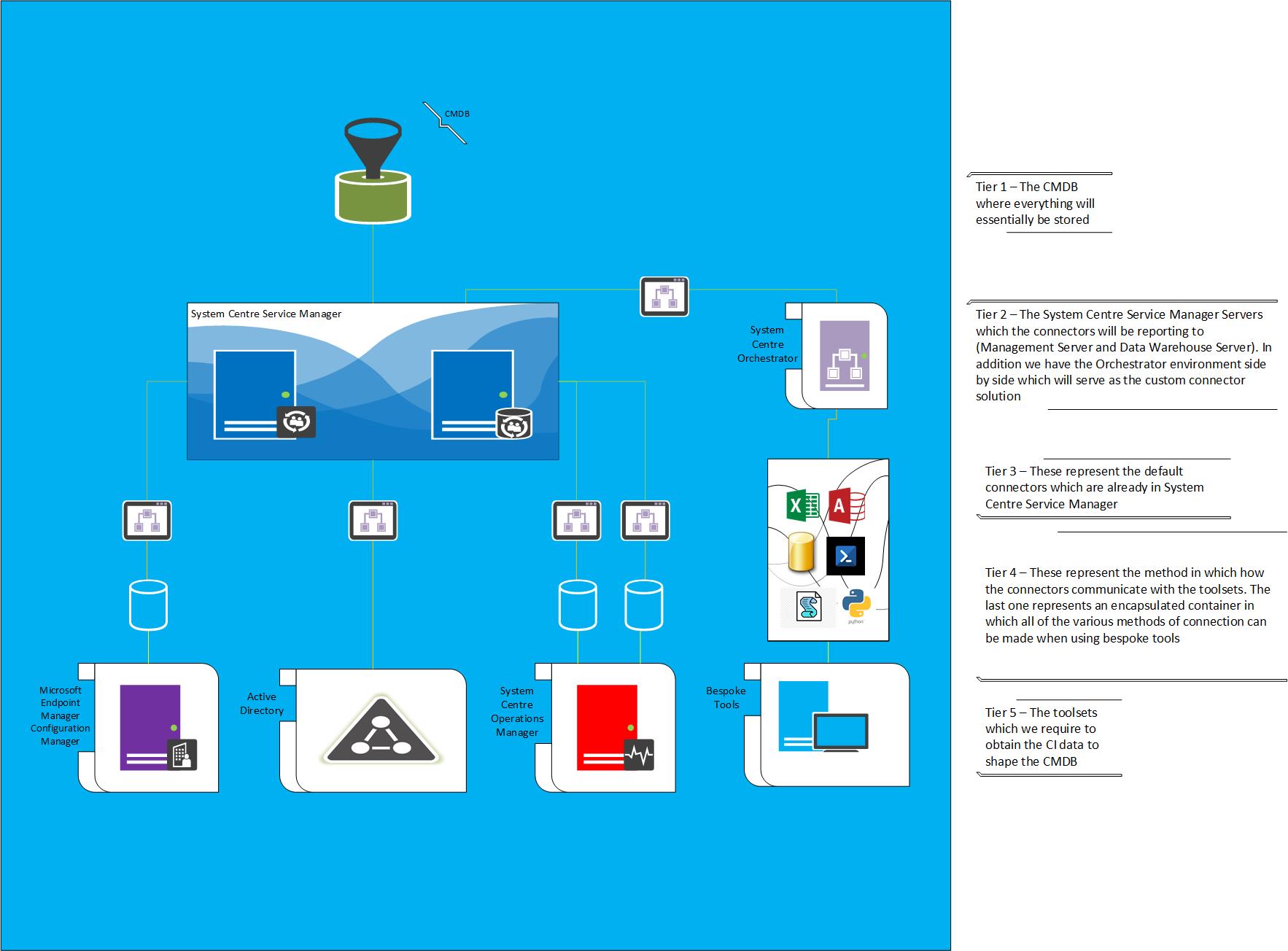

Scenario 2 - Design of CMDB with connections to all toolsets

This scenario is where we promote scalability within our CMDB solution: we are now able to broaden the scope to every toolset we have, on a global scale.

The structure is very similar to the previous scenario, but we have now introduced another piece to the solution which helps with the scalability of reaching those toolsets.

If we look at Tier 5 there is an object representing all "Bespoke Tools". This is my way of grouping not only solutions which may be developed in house, but also Microsoft products which don't have a default connector, such as Exchange (there is one for SMTP channels), SharePoint and various other products.

When we look at the next tier up we see multiple products. These are good examples of the methods which could be used to pull data into Service Manager, such as:

- Excel - CSV and XLSX files

- Access - Access Database Files

- PowerShell - Connection via PowerShell Scripting + SDK integration

- VBScript - Utilising a VBScript for perhaps more legacy based toolsets

- Python - A fuller programming language which may be able to interrogate a toolset much further, and which serves as another alternative

- SQL Database - Although this is the default route for the connectors which already come with Service Manager, other toolsets won't have a direct plugin, so a custom SQL query or ODBC connection may be required.
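As a rough illustration of the CSV route from the list above, the following Python sketch reads an export from a bespoke tool and normalises it into CI records ready for import. The column headings, the `COLUMN_MAP` mapping and the `load_ci_records` function are hypothetical names chosen for the example; a real export would need its own mapping, and the step which actually writes into Service Manager is deliberately left out.

```python
import csv
import io

# Hypothetical mapping from the export's column headings to CI attribute names.
COLUMN_MAP = {"Hostname": "name", "Serial No": "serial_number", "Owner": "owner"}


def load_ci_records(csv_file):
    """Read a CSV export and return a list of normalised CI records.

    Blank values are dropped, names are lower-cased, and rows without a
    hostname are skipped because they cannot be matched to a CI.
    """
    records = []
    for row in csv.DictReader(csv_file):
        record = {}
        for column, attr in COLUMN_MAP.items():
            value = (row.get(column) or "").strip()
            if value:
                record[attr] = value
        if "name" in record:
            record["name"] = record["name"].lower()
            records.append(record)
    return records


# Made-up export content; in practice this would be an opened .csv file.
sample_export = io.StringIO(
    "Hostname,Serial No,Owner\n"
    "APP01,ABC123,Finance\n"
    ",XYZ999,Unknown\n"
    "App02, ,HR\n"
)
records = load_ci_records(sample_export)
```

Keeping the mapping in one place means each bespoke tool only needs its own `COLUMN_MAP`, while the clean-up rules stay the same across every source.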

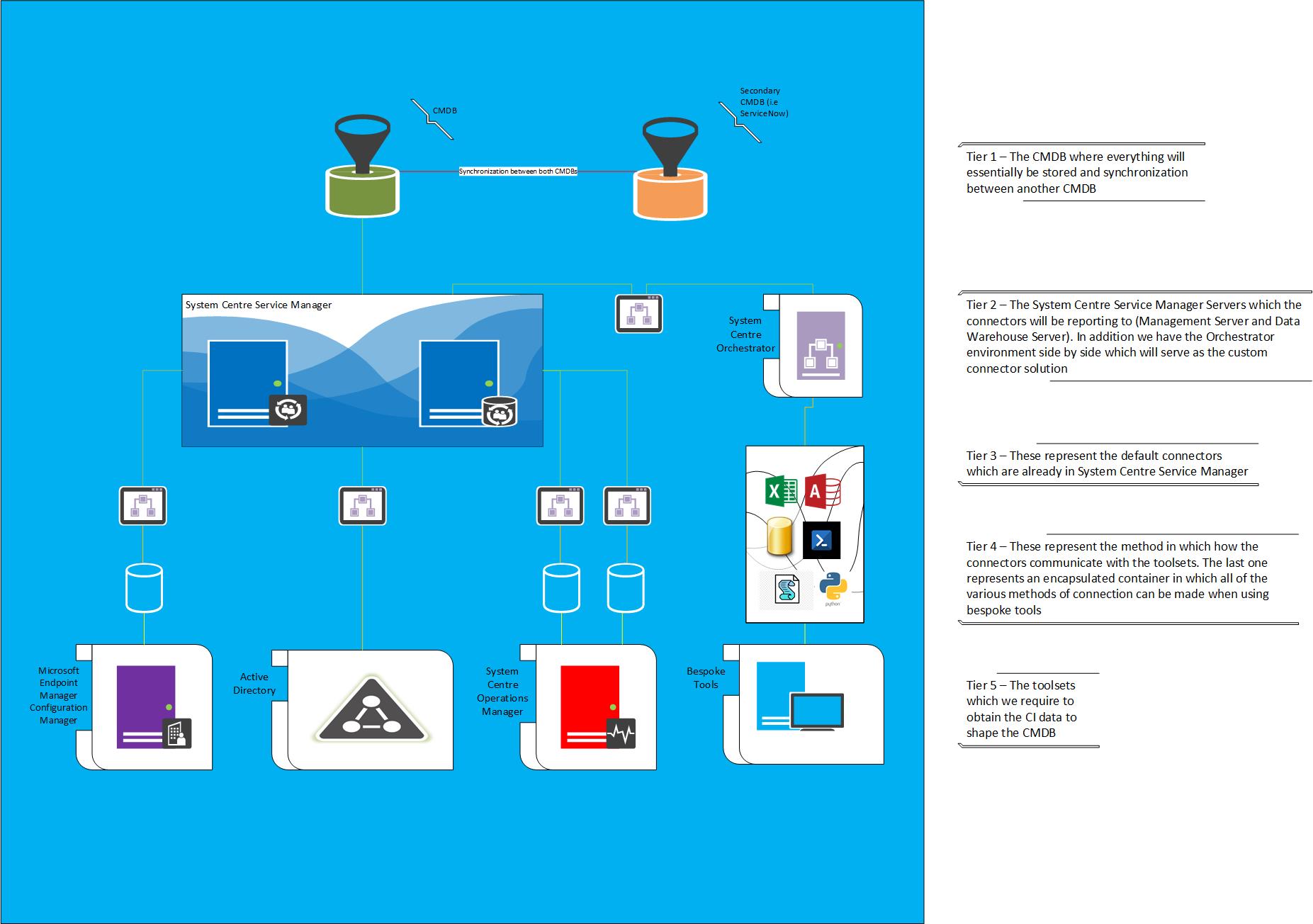

Scenario 3 - Design of CMDB with all toolsets with Integration to another CMDB

This is not necessarily a popular scenario or setup, but it is a means of keeping an automated CMDB in one place which can then synchronize to another.

Again this scenario is very similar to the second. Here, however, we are detailing a high level design where the fully automated CMDB is not the primary technology used for Service Management, as many organisations use a technology such as ServiceNow. Even with the power of ServiceNow, many products do not have an easy connection point to go directly into it, though there are exceptions: SCCM/MEMCM can have a connector to ServiceNow, as can SCOM through tools around Event Management and others such as Evanios.

This scenario gives an idea of how it can be done. That said, it is somewhat out of scope, as the series will focus primarily on building the actual workflow within System Center. Once your CMDB automation solution is built, though, you can go that route to expand further. Kelverion Integration Packs, for example, are one of a few solutions which can provide a connection between the two.
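For a one-way synchronization of this kind, the core of the logic is working out what differs between the two CMDBs. The Python sketch below shows that comparison under simple assumptions: both CMDBs are represented as plain dictionaries keyed by CI name, and `plan_sync` is a hypothetical helper of my own. It does not call ServiceNow, Service Manager or any integration pack; it only produces the list of changes that such a tool would then have to apply.

```python
def plan_sync(source, target):
    """Compare two CMDB snapshots and plan a one-way sync from source to target.

    Both arguments map a CI name to a dict of attributes. The result lists
    which CIs to create in the target, which to update, and which exist only
    in the target and are therefore candidates to retire.
    """
    plan = {"create": [], "update": [], "retire": []}
    for name, attrs in source.items():
        if name not in target:
            plan["create"].append(name)
        elif target[name] != attrs:
            plan["update"].append(name)
    for name in target:
        if name not in source:
            plan["retire"].append(name)
    for names in plan.values():
        names.sort()  # stable, readable ordering for review
    return plan


# Made-up snapshots of the automated CMDB and the other CMDB.
automated_cmdb = {
    "srv01": {"os": "Windows Server 2019"},
    "srv02": {"os": "Windows Server 2022"},
    "pc07": {"os": "Windows 10"},
}
other_cmdb = {
    "srv01": {"os": "Windows Server 2016"},
    "pc07": {"os": "Windows 10"},
    "old99": {"os": "Windows Server 2008"},
}
plan = plan_sync(automated_cmdb, other_cmdb)
```

Producing a plan first, rather than writing changes straight away, leaves room to review the retire list before anything is removed from the other CMDB.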

Overview of all Scenarios - Target Scenario

Overall we have analysed the tools we have, and we have now laid out a high level design of what we feel the solution will look like. Scenario 1 is ideally where we want to start as a foundation, but we know that in the real world other tools would fall by the wayside with this setup, so we want to leave room to scale and capture everything as in Scenario 2.

To summarise across all the toolsets: for the tools we don't have connectors for, we simply create a custom one to bring them all together. For the others which already have a default connector, we simply need to enrich the data and prepare quality control before and after setting up successful connector synchronization.

Next on Part 3

Part 3 will focus on the low level design of how we establish the connectors to products which already have one. It will also look at best practices and ways to enrich the data and put quality control in place for the integrity of everything being synchronized into Service Manager, so we don't end up with out-of-date or orphaned CI records.